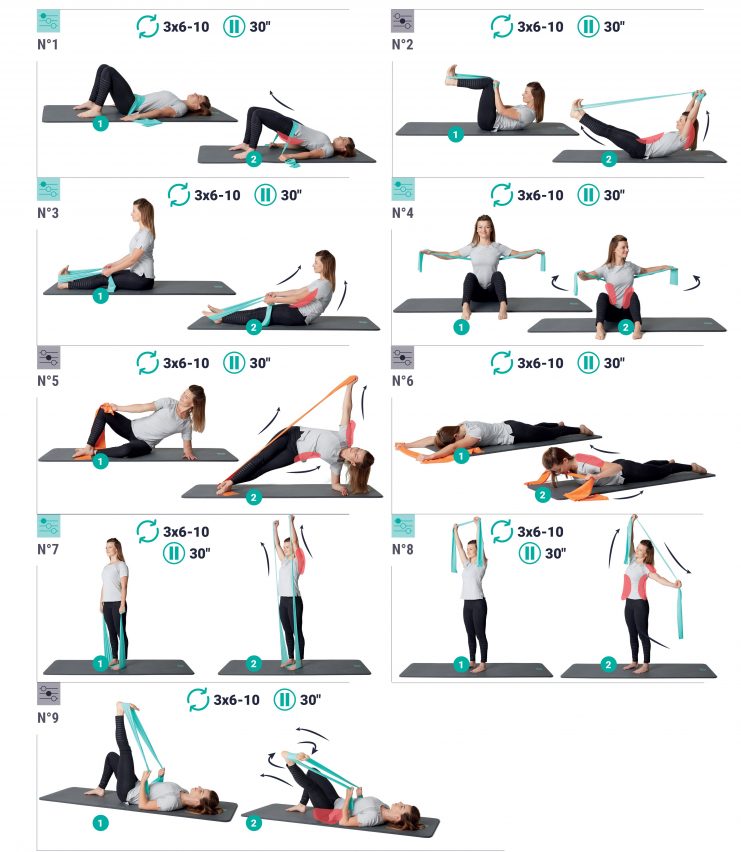

GYM777 Fabric Resistance Bands for Training, Free E-Book with 50 Exercises, Fitness, Yoga, Pilates, Set x3, 8-27 kg | GYM777 Resistance Bands

PrimaFIT Door Anchor with Carabiner for Exercises and Workouts with Fitness Resistance Bands - eMAG.bg

Resistance Band for Training, Fitness, Pull-Ups, Video with 111 Exercises, 27 kg, Sofia, Sveta Troitsa • OLX.bg

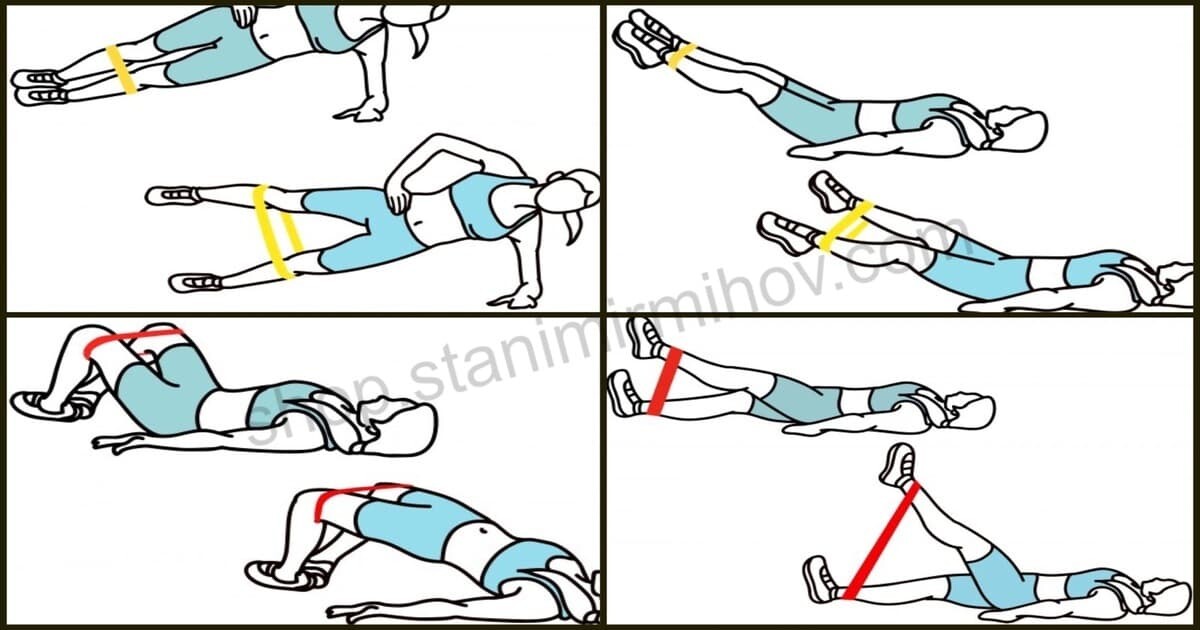

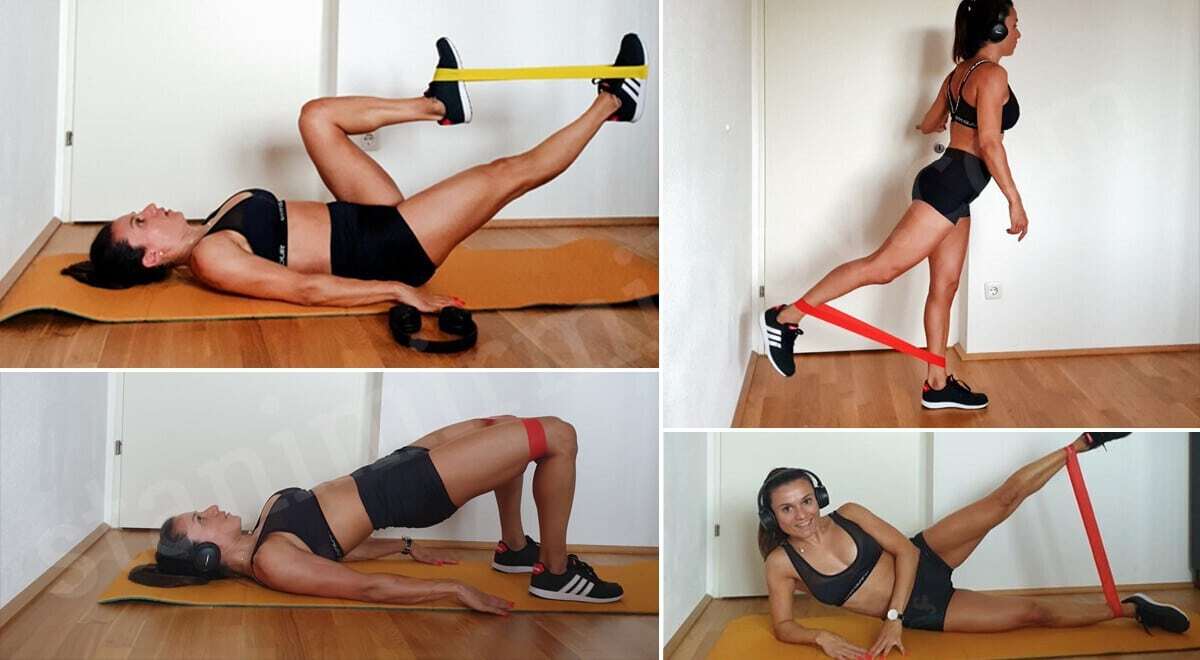

Stanimir Mihov Fitness Resistance Bands, Set of 5 with Different Resistance Levels, Online Video with Top Exercises for Legs and Thighs, 2-19 kg, Bands, Multicolor - eMAG.bg