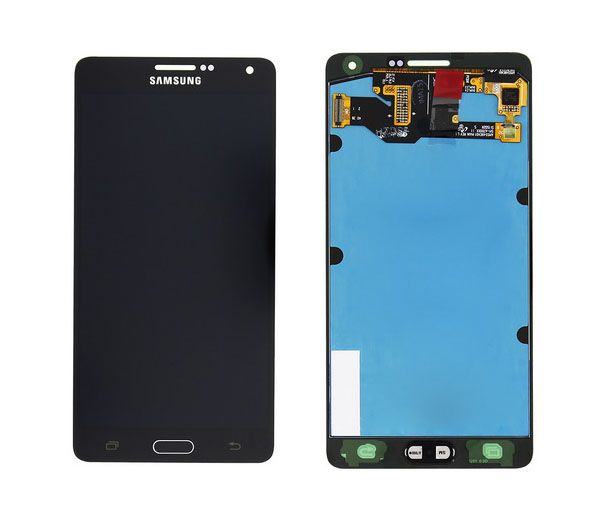

Samsung Galaxy A7 2015 midnight black (dark blue), Mobile Phones & Gadgets, Mobile Phones, Android Phones, Samsung on Carousell

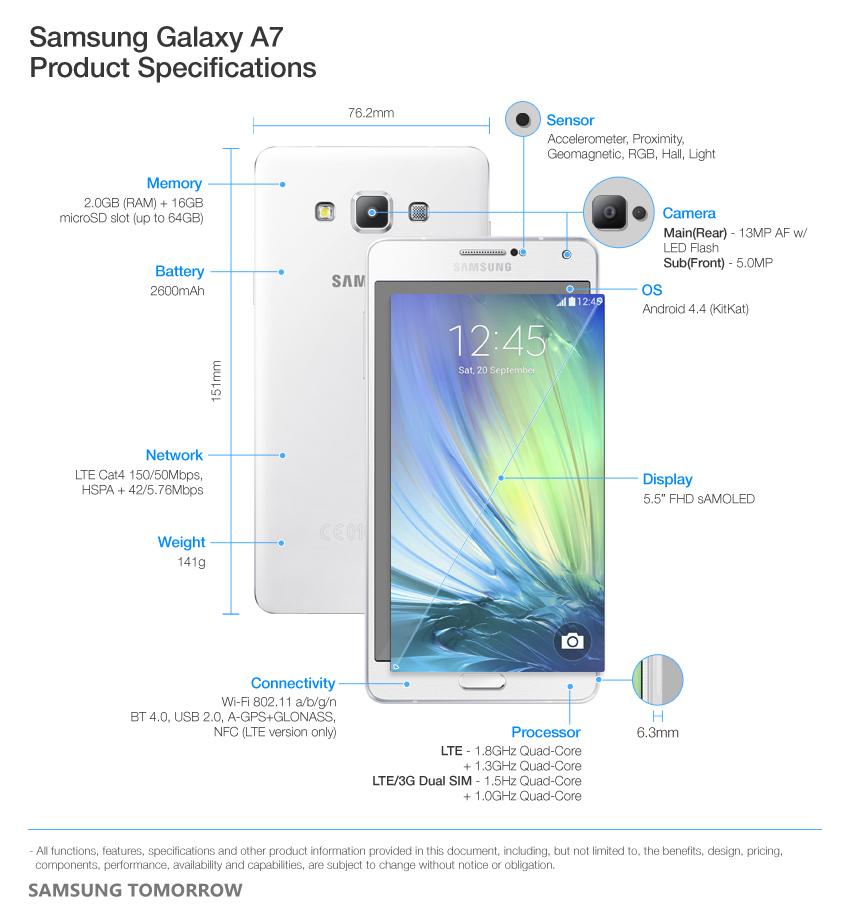

Android Infotech on X: "Root Samsung Galaxy A7 2015 SM-A700F/FD/H/K Marshmallow 6.0.1 using TWRP. #samsunggalaxy #a72015 https://t.co/BCVcXJA0Ne https://t.co/SvUAj7GNdo" / X

New Battery for Samsung Galaxy A7 (2015) SM-A700H SM-A700F SM-A700FD EB-BE700ABE Original High Quality | Lazada PH

Samsung Galaxy A7 (2015) SM-A700F, 16GB, 4G, Single SIM, Factory Unlocked, Brand New; Champagne Gold, Midnight Black, Pearl White | KICKmobiles®